With advances in AI, the need for larger datasets has grown. Such datasets are important for tasks like sentiment analysis, getting a good view of current trends, or performing a competitor analysis.

Luckily for us, scraping Facebook can provide a large amount of useful data. The one obstacle that gets in the way is IP bans.

Follow along to build your very own Facebook scraper.

What Exactly is Facebook Scraping?

First things first: before scraping Facebook, let’s look at what exactly Facebook scraping is.

Well, for starters, it is a data extraction technique that automatically gathers information from the Facebook platform. Although this sounds like an easy process, it’s not. It involves systematically retrieving data points from various sections of Facebook, such as user profiles, posts, likes, comments, and followers. It is useful in many areas, such as:

- In Market Research, it can help analyze market trends, consumer behavior, and competitor strategies.

- In Brand Monitoring, it can track brand mentions, sentiment, and discussions on Facebook by scraping tags and comments relevant to the brand’s keywords.

- In Customer Feedback and Sentiment Analysis, it can extract and analyze comments and reactions on posts to gauge overall customer satisfaction.

Scrape Facebook Step by Step

Setting up the environment:

First, to scrape Facebook, you need Python installed on your machine. For this tutorial, we recommend Python 3.9 or later; download it from the official Python website (python.org) and install it on your device. Other than that, we will need two libraries for scraping.

BeautifulSoup:

Beautiful Soup is one of the best-known libraries designed specifically for pulling data out of HTML and XML files. It provides many functions for iterating over, searching, and modifying the parse tree.

Use pip in your terminal to install Beautiful Soup:

pip install beautifulsoup4
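To get a feel for what Beautiful Soup does before pointing it at Facebook, here is a small sketch that parses a hard-coded HTML snippet (made up purely for illustration) and searches its parse tree. It only uses find_all(), get_text(), and Python’s built-in "html.parser".

```python
from bs4 import BeautifulSoup

# A tiny, made-up HTML snippet standing in for a real page
sample_html = """
<div class="contact">
  <div class="item">Phone: 555-010-0199</div>
  <div class="item">Email: hello@example.com</div>
</div>
"""

# Build the parse tree with Python's built-in HTML parser
soup = BeautifulSoup(sample_html, "html.parser")

# Search the tree: every <div> whose class list contains "item"
items = soup.find_all("div", class_="item")

# Iterate over the matches and pull out their text
item_texts = [item.get_text(strip=True) for item in items]
print(item_texts)  # ['Phone: 555-010-0199', 'Email: hello@example.com']
```

The outer div is skipped because its only class is "contact"; the same find_all() pattern is what the scraper uses later on Facebook’s HTML.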

Selenium:

BeautifulSoup is a very good parsing library, but it cannot execute JavaScript, so on its own it struggles with dynamic web pages. For dynamic pages, and for interacting with the JavaScript side of a website, we will use Selenium.

You can install the selenium library using the following pip command:

pip install selenium

One important thing to remember is that Selenium needs a web driver to control the browser. You can use any browser you like; you just need the matching driver available to Selenium. Recent Selenium releases (4.6 and later) include Selenium Manager, which can download a matching driver automatically. For this tutorial, we will use Google Chrome with ChromeDriver, which is what webdriver.Chrome() in the code below expects.

Once these are installed, you are well equipped to start scraping Facebook.
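If you want to double-check the setup before writing any scraping code, the following optional sketch may help. The helper names are our own; it compares the running Python version against the 3.9 recommendation and uses importlib.util.find_spec() to see whether bs4 (the import name of the beautifulsoup4 package) and selenium can be imported. It does not check for a browser or driver.

```python
import importlib.util
import sys

# Import names of the two libraries installed with pip in this tutorial
REQUIRED_MODULES = ["bs4", "selenium"]


def missing_modules(module_names):
    """Return the top-level module names that cannot be found."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


def check_environment(min_version=(3, 9)):
    """Print a short report and return True if everything looks ready."""
    version_ok = sys.version_info >= min_version
    missing = missing_modules(REQUIRED_MODULES)
    print(f"Python {sys.version.split()[0]}: {'OK' if version_ok else 'too old'}")
    if missing:
        print("Missing libraries:", ", ".join(missing))
    else:
        print("All required libraries are installed.")
    return version_ok and not missing


check_environment()
```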

Next, set up the project folder by running the following command in your terminal:

mkdir fb-scraper-using-python

What kind of data will we actually be scraping?

For this tutorial, we will scrape a page’s contact details: phone number, email, and address. Once you have the basics down, you can modify the code to suit your needs.

To start scraping, you need a way to retrieve the raw HTML of the target page. We will use Selenium for that, and then use BS4’s built-in functions to parse the relevant data out of the HTML.

Then open a terminal in the project directory and launch your favorite code editor. We will use Visual Studio Code, but any editor works.

Then create a new file named script.py and start writing the code.

Importing the Libraries

Start by importing the libraries. We only need three: BeautifulSoup, Selenium’s webdriver, and Python’s built-in time module.

from bs4 import BeautifulSoup

from selenium import webdriver

import time

Initializing the Variables

Next, we initialize the variables that will store the scraped data. result_entries is an empty list that will store dictionaries of contact details, and current_entry is an empty dictionary that holds one page’s contact details before it is added to result_entries.

result_entries = []

current_entry = {}

Defining the Target URL and Starting the WebDriver

Afterward, we specify custom_website_url, the page we will scrape data from. This is where most of the customization happens: for instance, you could set up a list of Facebook pages you want to scrape. For this tutorial, we use a single page, but you can adjust that to your needs. We then create a new Chrome web driver using the webdriver.Chrome() function.

custom_website_url = "https://www.facebook.com/gillette"

web_driver_instance = webdriver.Chrome()

Open the URL, Wait, and Get the Page Source

Next, we open the URL in the browser by calling the .get() method on web_driver_instance and passing it the URL. We then pause the script with time.sleep() for a few seconds (five in our case) so the page has time to load fully.

Once the page has loaded, we read its HTML from the .page_source attribute, store it in the webpage_source variable, and then shut down the browser. If you want to scrape more than one page, you can wrap these steps in a for loop over a list of URLs.

For this tutorial, we will be keeping things simple; that’s why one URL is sufficient.

web_driver_instance.get(custom_website_url)

time.sleep(5)

webpage_source = web_driver_instance.page_source

web_driver_instance.quit()  # quit() ends the whole WebDriver session; close() only closes the current window
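As mentioned above, you can loop over several pages instead of one. Here is a minimal sketch of that loop, assuming a single driver is reused for every page; fetch_page_sources() is a hypothetical helper name, and it accepts any object with a .get(url) method and a .page_source attribute, which a Selenium WebDriver has.

```python
import time


def fetch_page_sources(driver, urls, wait_seconds=5.0):
    """Open each URL with a Selenium-style driver and return {url: html}.

    `driver` only needs a .get(url) method and a .page_source attribute,
    as a Selenium WebDriver provides.
    """
    page_sources = {}
    for url in urls:
        driver.get(url)
        # Fixed pause so JavaScript-rendered content can load, as in the tutorial
        time.sleep(wait_seconds)
        page_sources[url] = driver.page_source
    return page_sources
```

In script.py you would create the driver with webdriver.Chrome(), pass it in along with your list of page URLs, and call quit() in a finally block so the browser closes even if one page fails. If the fixed sleep proves unreliable, Selenium’s WebDriverWait (in selenium.webdriver.support.ui) is the built-in way to wait for a specific condition instead.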

Parsing HTML with BeautifulSoup

Now, to filter out the relevant details, we need to create a BeautifulSoup object. We do that by passing webpage_source (the variable that holds the target page’s HTML) to BeautifulSoup(), along with 'html.parser', Python’s built-in HTML parser.

parsed_html = BeautifulSoup(webpage_source, 'html.parser')

Finding Target Elements

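This step will vary with your use case. Since we only want the company’s contact information, we pass the class names of the divs that contain it. If you want to scrape posts or comments instead, use the classes of those elements. Keep in mind that Facebook’s class names are auto-generated and can change, so you will likely need to inspect the page in your browser’s developer tools and update them.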

target_sections = parsed_html.find_all('div', {'class': 'x9f619 x1n2onr6 x1ja2u2z x78zum5 x2lah0s x1qughib x1qjc9v5 xozqiw3 x1q0g3np x1pi30zi x1swvt13 xyamay9 xykv574 xbmpl8g x4cne27 xifccgj'})[1]

all_custom_details = target_sections.find_all("div", {"class": "x9f619 x1n2onr6 x1ja2u2z x78zum5 x2lah0s x1nhvcw1 x1qjc9v5 xozqiw3 x1q0g3np xyamay9 xykv574 xbmpl8g x4cne27 xifccgj"})
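Passing the full class string to find_all() only matches elements whose class attribute is exactly that string, in that order, and the [1] index raises an IndexError if fewer than two sections are found. A slightly more forgiving sketch, assuming you only need a few distinctive classes, uses Beautiful Soup’s select() with a CSS selector, which matches elements carrying all the listed classes in any order. The HTML and the class names sec, x1, and x2 are made up for illustration, and nth_or_none() is a hypothetical helper.

```python
from bs4 import BeautifulSoup

# Made-up markup: the same classes appear in a different order on each div
sample_html = """
<div class="sec x1 x2">Intro</div>
<div class="x2 sec x1">Contact section</div>
"""

parsed = BeautifulSoup(sample_html, "html.parser")

# Exact-string matching depends on class order, so only the first div matches
exact_matches = parsed.find_all("div", {"class": "sec x1 x2"})

# A CSS selector matches every div that has all three classes, in any order
selector_matches = parsed.select("div.sec.x1.x2")


def nth_or_none(elements, index):
    """Return elements[index], or None when there are too few matches."""
    return elements[index] if len(elements) > index else None


# Equivalent of the tutorial's [1], without raising IndexError on a short list
target_section = nth_or_none(selector_matches, 1)
print(len(exact_matches), len(selector_matches), target_section.get_text())
```

With real Facebook markup you would check target_section for None before calling find_all() on it.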

Iterating Through Custom Details and Extracting Relevant Information

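Here, we iterate through every detail and use a few simple heuristics to decide what each one holds: text with more than two comma-separated parts is likely an address, text with more than two hyphen-separated parts is likely a phone number, and text containing an @ is likely an email address. At the end, the collected details are added to result_entries.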

for custom_detail in all_custom_details:
    detail_text = custom_detail.text

    # More than two comma-separated parts: likely a street address
    if len(detail_text.split(",")) > 2:
        current_entry["custom_info"] = detail_text
        continue

    # More than two hyphen-separated parts: likely a phone number
    if len(detail_text.split("-")) > 2:
        current_entry["another_info"] = detail_text
        continue

    # An @ sign: likely an email address
    if "@" in detail_text:
        current_entry["email_info"] = detail_text

# Store this page's details so they appear in the final result
result_entries.append(current_entry)
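Because these heuristics are easy to get wrong, it can help to pull them into plain functions you can test without opening a browser. The sketch below mirrors the loop’s checks in the same order; classify_detail() and build_entry() are hypothetical helper names, not part of the original script.

```python
def classify_detail(text):
    """Return the key the loop above would use for this text, or None."""
    if len(text.split(",")) > 2:
        return "custom_info"   # several comma-separated parts, e.g. an address
    if len(text.split("-")) > 2:
        return "another_info"  # several hyphen-separated parts, e.g. a phone number
    if "@" in text:
        return "email_info"    # contains an @, e.g. an email address
    return None


def build_entry(detail_texts):
    """Classify each text; like the loop, later matches overwrite earlier ones."""
    entry = {}
    for text in detail_texts:
        key = classify_detail(text)
        if key is not None:
            entry[key] = text
    return entry
```

Testing this way also exposes the heuristics’ limits: because the hyphen check runs before the @ check, an email address such as a-b-c@example.com ends up under another_info.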

And then, finally, print out the result:

print(result_entries)

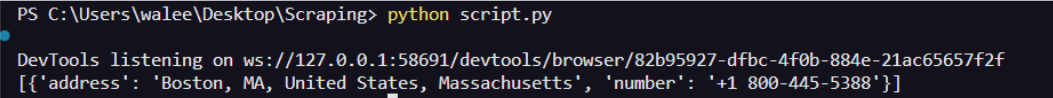

Save this to script.py, then run the following command in the terminal to see the result:

python script.py
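Printing is fine for a quick check, but you will usually want to keep the results. As an optional extra, here is a sketch that turns result_entries into CSV text using Python’s built-in csv module; entries_to_csv() is a hypothetical helper, and the column names simply reuse the keys from the loop above.

```python
import csv
import io

FIELDNAMES = ["custom_info", "another_info", "email_info"]


def entries_to_csv(entries, fieldnames=FIELDNAMES):
    """Serialize a list of contact dictionaries to CSV text."""
    buffer = io.StringIO()
    # restval fills in any field a page did not provide
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(entries)
    return buffer.getvalue()
```

To save a file, open it with newline="" (for example open("contacts.csv", "w", newline="", encoding="utf-8")) and write the returned text to it.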

Problems encountered when scraping Facebook

The most common problem encountered when scraping Facebook is IP bans. For instance, if we were scraping more than one page, the browser would fetch each target page in turn from the same IP address, and that repeated traffic can lead to an IP ban. The solution is to use rotating proxies while scraping, so that requests to the target pages come from different IP addresses, which reduces the risk of bans. That is why proxies are a must-have in almost every scraping process.
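Here is a minimal sketch of rotating proxies with Chrome, assuming you have a list of proxies that do not require a username and password. The addresses below are placeholders from the 203.0.113.0/24 documentation range, and proxy_arguments() is a hypothetical helper that cycles through them and builds Chrome’s --proxy-server argument.

```python
import itertools

# Placeholder proxy addresses; replace them with your provider's proxies
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_arguments(proxies):
    """Yield Chrome --proxy-server arguments, cycling through the proxies forever."""
    for proxy in itertools.cycle(proxies):
        yield f"--proxy-server={proxy}"
```

Chrome reads this flag when it starts, so rotating means launching a new driver per proxy: create options with webdriver.ChromeOptions(), call options.add_argument(next(proxy_args)), start webdriver.Chrome(options=options), scrape one page, then quit() before moving on to the next URL.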

How to Choose the Best Proxy Provider for Your Scraping Needs?

With so many proxy services available, it can be hard to pick the best one, right? With PetaProxy, almost every proxy-related problem can be solved.

PetaProxy offers almost every solution you could ask for. From data center proxies to mobile proxies, they have everything covered, so whether you need help with social media scraping or with scraping large-scale data for analysis, the best option you can go with is PetaProxy. Furthermore, PetaProxy promises high uptime and smooth operation, so your tasks continue unhindered.

Conclusion

Knowing how to scrape Facebook with Python opens up many exciting ways to gather useful information. By pulling data from user profiles, posts, and comments, we can learn about market trends, customer behavior, and competitor tactics. Dealing with IP bans, on the other hand, is hard. To get around this problem, use rotating proxies, like those offered by PetaProxy, so that your scraping runs smoothly. With the right tools and know-how, Facebook scraping isn’t just a technical job; it’s also valuable for research and smart decision-making in many areas.